
Data science continues to grow, and so does the sea of resources that support it. Among these, GitHub stands out as a reliable place to explore useful tools, real-world datasets, and working code. Whether you're just getting started or already knee-deep in machine learning projects, GitHub repositories offer ready-made resources to learn, build, and experiment. But with so many out there, it’s easy to get lost. So let’s narrow it down. Here’s a curated list of ten standout repositories that have become go-to references for learners and professionals alike.

This repository is a practical catalog of algorithms written in Python, with a focus on clarity over complexity. It includes everything from basic searching and sorting to dynamic programming and graph-based solutions. The code is clean, well-commented, and built with beginners in mind.

For learners trying to understand algorithm design through actual code rather than textbook definitions, this one checks the boxes. Each algorithm lives in its own file, accompanied by explanations and often a link to the corresponding Wikipedia page. That straightforward layout is a large part of what makes it useful.
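
To give a sense of the one-algorithm-per-file style described above, here is a minimal binary search written in that spirit. It is an illustrative sketch, not code copied from the repository. The docstring examples double as doctests, a common Python convention for keeping explanations and working code side by side.

```python
from typing import Sequence


def binary_search(items: Sequence[int], target: int) -> int:
    """Return the index of target in a sorted sequence, or -1 if it is absent.

    >>> binary_search([1, 3, 5, 7, 9], 7)
    3
    >>> binary_search([1, 3, 5, 7, 9], 4)
    -1
    """
    low, high = 0, len(items) - 1
    while low <= high:
        mid = (low + high) // 2  # midpoint of the current search window
        if items[mid] == target:
            return mid
        if items[mid] < target:
            low = mid + 1  # target can only be in the right half
        else:
            high = mid - 1  # target can only be in the left half
    return -1


if __name__ == "__main__":
    import doctest

    doctest.testmod()  # runs the examples embedded in the docstring
```

Each loop iteration halves the search window, so the function needs at most about log2(n) comparisons on a sorted input.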

Built on top of PyTorch, this library simplifies deep learning tasks without sacrificing control. The creators didn’t just throw together a tool; they built a learning framework. Every design choice centers on making models faster to train, easier to read, and more intuitive to understand.

It's especially helpful for those who want to get into deep learning without writing boilerplate code. You can load datasets, preprocess data, and train state-of-the-art models in just a few lines, and the documentation reads like a mini-course in itself.

No list like this would be complete without Scikit-learn. It’s one of the oldest and most trusted libraries in the field, offering a full suite of tools for data mining, analysis, and modeling.

The real value, though, lies in its simplicity. Its API is so consistent across modules, with nearly every model sharing the same fit and predict methods, that switching from linear regression to random forests feels seamless. And with a large collection of well-documented examples, this one serves as both a tool and a tutor.
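
The consistency claim is easy to see in practice. This minimal sketch, assuming scikit-learn is installed, trains a linear regression and a random forest on the library's bundled diabetes dataset using exactly the same calls. The dataset choice, split, and hyperparameters are illustrative only.

```python
from sklearn.datasets import load_diabetes
from sklearn.ensemble import RandomForestRegressor
from sklearn.linear_model import LinearRegression
from sklearn.model_selection import train_test_split

# A small dataset that ships with scikit-learn, so no download is needed.
X, y = load_diabetes(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=0
)

# Two very different models, one identical workflow: construct, fit, score.
models = {
    "linear_regression": LinearRegression(),
    "random_forest": RandomForestRegressor(n_estimators=100, random_state=0),
}

scores = {}
for name, model in models.items():
    model.fit(X_train, y_train)  # same training call for every estimator
    scores[name] = model.score(X_test, y_test)  # R^2 on held-out data for regressors
    print(f"{name}: R^2 = {scores[name]:.3f}")
```

Swapping in a different regressor only means changing the constructor in the dictionary; the training and evaluation loop stays the same.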

Pandas is the lifeblood of data manipulation in Python. It's not flashy, but it's powerful. Once you get used to its DataFrames and method-chaining style, working with data becomes far less painful.

This repo isn't just for those looking to use Pandas in a project. It’s also helpful for those trying to understand why Pandas behaves the way it does. The issues tab and ongoing discussions give you a peek into its inner workings.
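
To make the chaining style concrete, here is a minimal sketch assuming pandas is installed. The sales table and its column names are hypothetical. Each step returns a new DataFrame, so the whole transformation reads top to bottom without intermediate variables.

```python
import pandas as pd

# Hypothetical sales records, used only to illustrate method chaining.
sales = pd.DataFrame(
    {
        "region": ["north", "south", "north", "east", "south", "east"],
        "units": [12, 7, 5, 20, 3, 9],
        "unit_price": [2.5, 3.0, 2.5, 1.5, 3.0, 1.5],
    }
)

summary = (
    sales
    .assign(revenue=lambda df: df["units"] * df["unit_price"])  # derive a new column
    .query("units >= 5")  # drop small orders
    .groupby("region", as_index=False)["revenue"]
    .sum()  # total revenue per region
    .sort_values("revenue", ascending=False)
    .reset_index(drop=True)
)
print(summary)
```

Passing a lambda to assign lets the new column be computed from the DataFrame as it exists at that point in the chain, rather than from the original variable.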

This isn’t a tool or a library—it’s a collection. A curated list of books, tutorials, libraries, newsletters, podcasts, and online courses. If you’re new and looking for direction, this is where you’ll find it.

What makes it stand out is the range. It doesn't just focus on machine learning or Python; it spans the full stack of data science, from statistics and data engineering to career advice and interview prep.

Getting ready for an interview? This repository can help. It compiles common questions from top tech companies, covering theory, code, and applied problems. From SQL queries to A/B testing logic, it touches all the essentials.

Each topic includes examples and answers, making it easy to study without bouncing between tabs. It’s not just about getting the right answer, but understanding how to explain it well.
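
A/B testing questions often come down to comparing two conversion rates. As an illustration (not taken from the repository), here is a minimal two-proportion z-test using only the standard library. The function name and the experiment numbers are hypothetical, and the test assumes samples large enough for the normal approximation to hold.

```python
import math


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int) -> tuple[float, float]:
    """Return (z statistic, two-sided p-value) for H0: both conversion rates are equal."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled rate under the null hypothesis that both groups share one conversion rate.
    p_pool = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    # Standard normal CDF via the error function, then a two-sided p-value.
    phi = 0.5 * (1 + math.erf(abs(z) / math.sqrt(2)))
    p_value = 2 * (1 - phi)
    return z, p_value


# Hypothetical experiment: 10.0% vs 12.5% conversion with 2,000 users per arm.
z, p = two_proportion_z_test(conv_a=200, n_a=2000, conv_b=250, n_b=2000)
print(f"z = {z:.2f}, p = {p:.4f}")
```

In an interview, the explanation matters as much as the number: the pooled rate is used for the standard error because the null hypothesis assumes no difference between the groups.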

This one feels more like a structured course than a collection of files. Made With ML offers end-to-end projects, walking you through everything from problem definition to model deployment.

What sets it apart is its focus on production. You’re not just building models—you’re learning how to get them into the real world. It brings in tools like MLflow, Docker, and AWS to help you understand how to scale and monitor your models.

As the name suggests, this repo challenges you to code something related to machine learning every day for 100 days. It’s structured, motivating, and includes lots of useful links, explanations, and small tasks.

Unlike other long-term challenges that can feel vague, this one is nicely broken down. Each day has a topic, and many days include summaries, helpful resources, and practice tasks.

If you're already using TensorFlow or planning to, this repo is a must. It houses the official models developed by the TensorFlow team, including object detection systems, language models, and recommendation engines.

The models are maintained by the TensorFlow team and intended for production use, so the code and documentation are held to a high standard. Whether you want to fine-tune a BERT model or experiment with image segmentation, this is where to look.

Based on Andrew Ng's well-known machine learning course, this repo contains the programming assignments and notes from learners who’ve gone through the material. It’s especially helpful if you’re struggling with the math or implementation details.

Many of the solutions are written in both Octave and Python, so you can compare how logic translates between languages. It’s not officially endorsed, but for learners, it’s a useful way to cross-reference your work.
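
To show how a course-style exercise translates from Octave into Python, here is a hedged NumPy sketch of batch gradient descent for linear regression. It minimizes the squared-error cost J(theta) = (1 / 2m) * sum((X @ theta - y)^2). The function names, learning rate, and synthetic data are illustrative assumptions, not code from the repository.

```python
import numpy as np


def compute_cost(X: np.ndarray, y: np.ndarray, theta: np.ndarray) -> float:
    """Squared-error cost J(theta) = (1 / 2m) * sum((X @ theta - y) ** 2)."""
    m = len(y)
    residuals = X @ theta - y
    return float(residuals @ residuals / (2 * m))


def gradient_descent(
    X: np.ndarray, y: np.ndarray, theta: np.ndarray, alpha: float, num_iters: int
) -> tuple[np.ndarray, list[float]]:
    """Batch gradient descent: all parameters are updated simultaneously each step."""
    m = len(y)
    history = []
    for _ in range(num_iters):
        gradient = X.T @ (X @ theta - y) / m  # vectorized partial derivatives of J
        theta = theta - alpha * gradient
        history.append(compute_cost(X, y, theta))
    return theta, history


# Synthetic, noise-free data from y = 1 + 2x, with a column of ones for the intercept.
x = np.linspace(0, 5, 50)
X = np.column_stack([np.ones_like(x), x])
y = 1 + 2 * x

theta, history = gradient_descent(X, y, theta=np.zeros(2), alpha=0.05, num_iters=5000)
print(theta)  # should approach [1, 2]
```

The vectorized update replaces an explicit loop over parameters, which mirrors the matrix form of the update rule that an Octave solution would typically use.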

There’s no shortage of data science material out there, but not all of it is worth your time. The ten repositories above are popular for a reason: they make learning practical and break complex ideas down into workable pieces. Whether you’re refining your code, prepping for interviews, or building your first end-to-end project, these repos offer a solid foundation without overwhelming you. You don’t have to follow all ten at once. Start with one that fits your current goals, and move forward from there. Keep exploring, stay consistent, and let curiosity drive your progress.

Advertisement

Looking for practical data science tools? Explore ten standout GitHub repositories—from algorithms and frameworks to real-world projects—that help you build, learn, and grow faster in ML

Learn how to build scalable systems using Apache Airflow—from setting up environments and writing DAGs to adding alerts, monitoring pipelines, and avoiding reliability pitfalls

A detailed look at training CodeParrot from scratch, including dataset selection, model architecture, and its role as a Python-focused code generation model

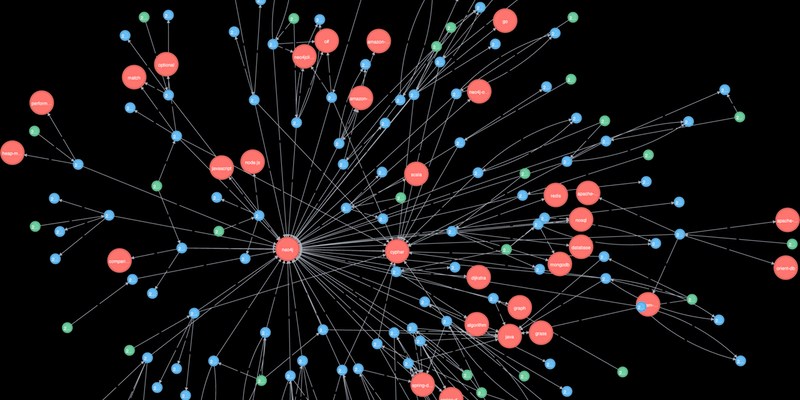

Explore how Neo4j uses graph structures to efficiently model relationships in social networks, fraud detection, recommendation systems, and IT operations—plus a practical setup guide

Learn how Apache Oozie coordinates Hadoop jobs with XML workflows, time-based triggers, and clean orchestration. Ideal for production-ready data pipelines and complex ETL chains

How Q-learning works in real environments, from action selection to convergence. Understand the key elements that shape Q-learning and its role in reinforcement learning tasks

Curious what’s really shaping AI and tech today? See how DataHour captures real tools, honest lessons, and practical insights from the frontlines of modern data work—fast, clear, and worth your time

Discover lesser-known Pandas functions that can improve your data manipulation skills in 2025, from query() for cleaner filtering to explode() for flattening lists in columns

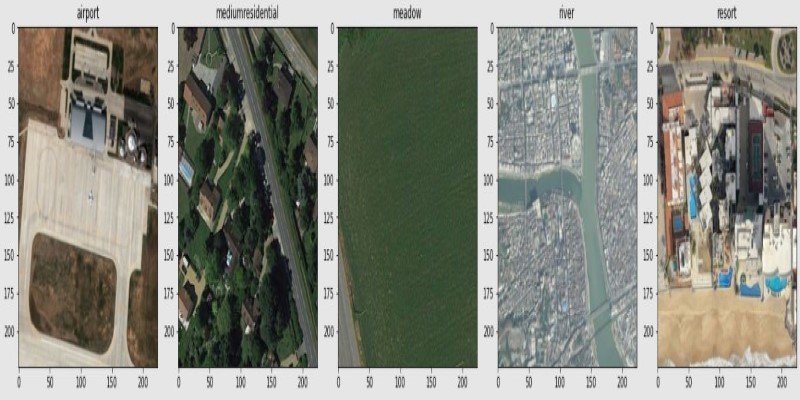

How fine-tuning CLIP with satellite data improves its performance in interpreting remote sensing images and captions for tasks like land use mapping and disaster monitoring

How a course launch community event can boost engagement, create meaningful interaction, and shape a stronger learning experience before the course even starts

Explore how data quality impacts machine learning outcomes. Learn to assess accuracy, consistency, completeness, and timeliness—and why clean data leads to better, more stable models

How do we keep digital research accessible and citable over time? Learn how assigning DOIs to datasets and models supports transparency, reproducibility, and proper credit in modern research