
There’s something satisfying about being tuned into what’s genuinely driving change right now. Whether it’s a tool gaining traction or a trend reshaping industries, staying informed feels less like catching up and more like keeping pace. That’s where DataHour shines. It’s not just a lineup of talks—it’s a sharp, time-conscious series that delivers what’s current in data, AI, and tech. What sets it apart is the way it blends practical insight with real-world context. Speakers don’t just present ideas—they share what they’ve actually done, what worked, and what didn’t. The tone stays easygoing, but the takeaways stick with you.

What’s interesting about DataHour is that it doesn’t just skim the surface. Tools are introduced with context—who’s using them, what they solve, and why they’re gaining attention. You’re not just hearing names; you’re getting examples.

LangChain has picked up steam, especially among those building with large language models. At DataHour, it gets treated not as hype, but as a practical answer to a common question: how do you chain model outputs into something useful? From document search to custom agents, speakers break it down clearly. Alongside it, there's growing attention toward tools that support LLMOps: prompt versioning, observability, and deployment workflows. It's the kind of behind-the-scenes detail that separates building from building smart.
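The core idea of "chaining" can be illustrated without any framework. The sketch below is plain Python, not LangChain's API: each step is a function whose output becomes the next step's input. The step names (`build_prompt`, `fake_llm`, `post_process`) are hypothetical, and `fake_llm` is a stub standing in for a real model call.

```python
from typing import Callable

# A step takes text in and returns text out
Step = Callable[[str], str]


def chain(*steps: Step) -> Step:
    """Compose steps so each one's output feeds the next (illustrative, not LangChain)."""
    def run(text: str) -> str:
        for step in steps:
            text = step(text)
        return text
    return run


def build_prompt(question: str) -> str:
    # Hypothetical prompt template
    return f"Answer briefly: {question}"


def fake_llm(prompt: str) -> str:
    # Stub: a real pipeline would call a model API here
    return prompt.upper()


def post_process(answer: str) -> str:
    # Hypothetical cleanup step
    return answer.strip()


pipeline = chain(build_prompt, fake_llm, post_process)
print(pipeline("what is a vector database?"))
```

Frameworks add retries, memory, tool calls, and tracing on top of this, but the shape stays the same: small steps composed into a pipeline.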

Speed has always mattered, but lately, the priority seems to be how quickly you can go from an idea to a working demo. Streamlit continues to shine in that space, especially when paired with FastAPI. DataHour sessions often feature live builds, which makes all the difference. Seeing someone push code, tweak inputs, and explain their choices as they go? You get clarity fast.

As much as everyone’s excited about performance, explainability is back in the spotlight. Tools like SHAP and LIME aren't new, but they're getting fresh attention now that more non-technical teams are reading the outputs. A few sessions were spent walking through examples where models were adjusted based entirely on how easy their results were to explain to stakeholders. That shift toward transparency and collaboration feels long overdue.
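To show what an explanation of this kind actually reports, here is a minimal sketch for the simplest case: a linear model with independent features, where each feature's SHAP value reduces to its weight times the distance of the input from that feature's mean. The model, weights, means, and feature names are all hypothetical, and this hand-rolled calculation is not the SHAP or LIME library. Real tools handle nonlinear models, which this sketch does not.

```python
import math
from typing import Dict

# Hypothetical toy model: a linear score over three made-up features
WEIGHTS: Dict[str, float] = {"income": 0.5, "debt": -1.2, "age": 0.1}
BIAS = 2.0
# Feature means over a hypothetical background dataset
MEANS: Dict[str, float] = {"income": 50.0, "debt": 10.0, "age": 40.0}


def predict(x: Dict[str, float]) -> float:
    return BIAS + sum(WEIGHTS[f] * x[f] for f in WEIGHTS)


def contributions(x: Dict[str, float]) -> Dict[str, float]:
    # For a linear model with independent features, each feature's SHAP value
    # is its weight times how far the input sits from the feature mean.
    return {f: WEIGHTS[f] * (x[f] - MEANS[f]) for f in WEIGHTS}


applicant = {"income": 60.0, "debt": 15.0, "age": 30.0}
baseline = predict(MEANS)
contribs = contributions(applicant)

# List features by how strongly they pushed the score, largest first
for name, value in sorted(contribs.items(), key=lambda kv: abs(kv[1]), reverse=True):
    print(f"{name:>6}: {value:+.2f}")

# The contributions add up from the baseline score to the actual prediction
assert math.isclose(baseline + sum(contribs.values()), predict(applicant))
```

That additive breakdown ("debt pulled the score down by 6, income pushed it up by 5") is the kind of output a non-technical stakeholder can read directly.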

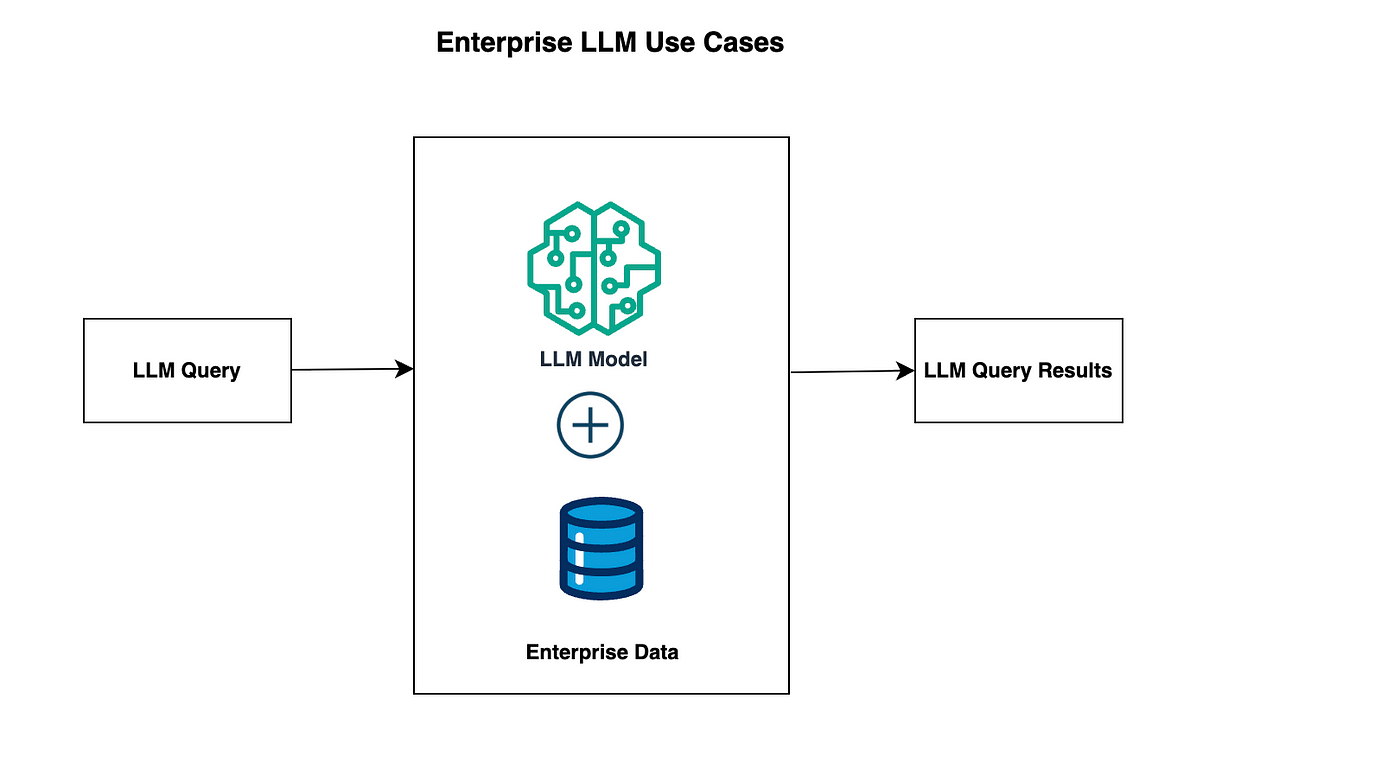

With large language models now part of the daily workflow for many teams, vector databases like FAISS, Pinecone, and Weaviate are seeing more traction. DataHour sessions haven't just showcased the theory; they've demoed actual pipelines where context gets retrieved in real time. You can almost feel the gears turning as the speaker walks through their setup: an input, a quick query across stored vectors, and then a response that feels far more aware than a standalone model could manage.
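The retrieval step described above can be sketched as a brute-force nearest-neighbour search. This is a minimal illustration under stated assumptions: the three-dimensional "embeddings" are made up (real ones come from an embedding model and have hundreds of dimensions), and a plain cosine-similarity scan stands in for the indexed search that FAISS, Pinecone, or Weaviate would provide. The helper names `cosine` and `top_k` are hypothetical.

```python
import math
from typing import Dict, List, Tuple


def cosine(a: List[float], b: List[float]) -> float:
    """Cosine similarity between two vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def top_k(query: List[float], store: Dict[str, List[float]], k: int = 2) -> List[Tuple[str, float]]:
    """Score every stored vector against the query and keep the k best."""
    scored = [(doc, cosine(query, vec)) for doc, vec in store.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


# Hypothetical toy "embeddings" keyed by the text they represent
store = {
    "Refund policy: 30 days": [0.9, 0.1, 0.0],
    "Shipping takes 5 days": [0.1, 0.9, 0.0],
    "Office hours are 9-5": [0.0, 0.1, 0.9],
}

query_vec = [0.8, 0.2, 0.0]  # pretend embedding of "How do refunds work?"
hits = top_k(query_vec, store, k=1)

# Retrieved text is placed in the prompt so the model answers with that context
context = "\n".join(doc for doc, _ in hits)
prompt = f"Context:\n{context}\n\nQuestion: How do refunds work?"
print(prompt)
```

A dedicated vector database replaces the linear scan with an approximate index, which matters once the store holds millions of vectors rather than three.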

DataHour isn't just about what's trending; it's also about figuring out what's here to stay. These themes aren't fleeting. They've surfaced across sessions, speakers, and industries, which says a lot.

More teams are solving problems while still figuring out the tools. That sounds messy, but it's honest—and surprisingly effective. Instead of waiting to be "ready," developers and analysts alike are jumping in, learning while shipping. Speakers often talk about their process in the present tense, not as a postmortem. That mindset shift—treating uncertainty as part of the process—keeps things fresh and keeps projects moving.

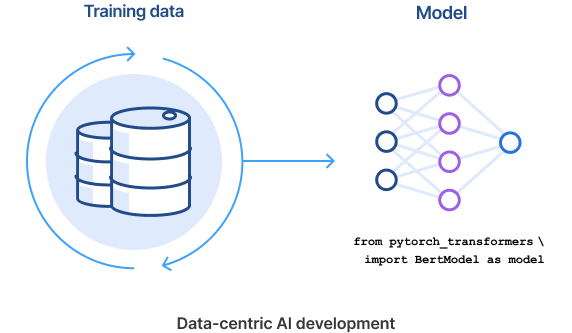

This one has been bubbling for a while, but it’s becoming more central now. Instead of obsessing over bigger, deeper models, more teams are asking: “What’s the quality of the data going in?” DataHour sessions have showcased real-world examples where better labeling, thoughtful sampling, or creative augmentation outperformed a model upgrade. It's a quiet trend, but an impactful one.

You’ll notice something if you attend a few sessions: the speaker’s title often doesn’t match what they’re doing. Data scientists are writing production code. Engineers are training models. Analysts are working with APIs. The lines are blurry—and that’s okay. It reflects the tools getting easier and the workflows getting more collaborative. You don’t need five teams for one project; you need curiosity and a bit of overlap.

Whether it’s bias, fairness, or the social impact of deployed models, discussions around ethics are more direct now. Instead of saving it for the Q&A, many speakers build it into their sessions. You’ll hear phrases like “we adjusted the model after seeing the output skew” or “we stopped this project because it wasn’t justifiable.” Those aren’t afterthoughts—they’re decision points. And the fact that they’re being shared openly? That’s progress.

DataHour isn't a passive listen-and-learn format. If you want to actually get value out of each session, a few simple steps help it click.

Before jumping into a session, take a moment to decide what you want out of it. Are you looking for something to apply this week? Just exploring? That bit of intention makes it easier to follow along and know which parts to jot down.

Recordings are great, but something about the live format changes the energy. You can ask questions, spot patterns in what others are curious about, and even get direct input from speakers. Plus, you’ll often get bonus tips that don’t make it into the slides.

Rather than scribbling everything, take screenshots of code snippets or use tools that let you clip parts of the screen. Visual memory sticks better, especially when you’re trying to replicate something later.

Whether it's with teammates, on social media, or just a quick note to yourself, sharing what you learned helps solidify it. Plus, it opens the door for others to weigh in or share related resources.

Put one thing into practice soon after the session. It doesn't have to be a full project: just one line of code, one new tool installed, or one concept reviewed. Waiting too long turns interest into noise. Even five minutes of action makes the learning feel more real.

DataHour isn’t trying to be flashy. It’s doing something better—it’s keeping things real. The format respects your time. The sessions respect your intelligence. And the speakers, more often than not, share lessons that are still in progress, which makes them more honest than polished.

If you’re even slightly curious about where things are headed in tech, data, or AI, it’s worth tuning in. Not to “stay ahead” or “keep up,” but because it’s refreshing to hear smart people talk about what they’re actually working on, in plain terms. And that? That’s rare.
