Margaret Mitchell is one of the most recognizable voices in machine learning, not because she builds the biggest models but because she asks the most grounded questions. In a field racing toward faster, smarter AI, she slows things down to ask who benefits, who's left out, and what the long-term consequences might be.

While many focus purely on performance, Mitchell has spent her career putting people back at the centre of the conversation. She doesn't treat ethics as a side project. For her, it's a foundation. This approach has made her stand out among today's most thoughtful machine-learning experts.

Mitchell started her career in academia, exploring how humans and computers interact through language. Her early research touched on meaning, miscommunication, and intent — ideas that would later shape her views on responsible AI. With a Ph.D. in computer science, she focused on natural language processing and human-centered machine learning, long before these became common themes in mainstream research.

Her move into industry gave her a new platform. At Google, she co-led the Ethical AI team, pushing the conversation beyond technical benchmarks. She helped create internal tools to examine bias, data imbalance, and unintended outcomes in machine learning systems. Her aim was not just high performance but high accountability.

Mitchell believed that transparency in how models are trained, evaluated, and used was as important as accuracy. She helped introduce model documentation practices that showed what data was used, where models might fail, and who might be affected. These tools weren’t just meant for engineers. They were created for anyone trying to understand what a machine learning model really does.

During her time at Google, Mitchell often found herself at odds with the structure of corporate AI development. Her dismissal from the company in 2021, following internal conflict, made headlines, but it also sparked broader conversations about ethics in AI. It highlighted how difficult it can be to challenge systems from within, and why voices like hers are so important.

Fairness, for Mitchell, is not a checkbox. It's a method, a process, and a mindset. She has spent years urging researchers and developers to think critically about every step in building a machine learning system. From data collection to deployment, she asks whether the system could replicate existing harms or unfairly exclude people.

One of her best-known contributions is the concept of “Model Cards,” a framework for documenting machine learning models in a clear, consistent way. Much like a product label, these cards explain what a model is designed for, how it was trained, and where it might struggle. This kind of transparency helps reduce the mystery around AI systems and puts some of the responsibility back in human hands.
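To make the product-label comparison concrete, here is a minimal sketch of what a model card might capture, written in Python. It is an illustration only, not the published Model Cards format or any library's API: the `ModelCard` class, its field names, and the toy classifier it describes are all hypothetical, chosen to mirror the three questions above (what the model is for, how it was trained, where it might struggle).

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class ModelCard:
    """Hypothetical, simplified model card; the field names are illustrative."""

    name: str
    intended_use: str       # what the model is designed for
    training_data: str      # how it was trained
    known_limitations: List[str] = field(default_factory=list)  # where it might struggle

    def render(self) -> str:
        """Return the card as plain text, one labelled entry per line."""
        lines = [
            f"Model: {self.name}",
            f"Intended use: {self.intended_use}",
            f"Training data: {self.training_data}",
            "Known limitations:",
        ]
        if self.known_limitations:
            lines += [f"  - {item}" for item in self.known_limitations]
        else:
            # An empty list is reported explicitly rather than silently omitted.
            lines.append("  - (none documented)")
        return "\n".join(lines)


# A made-up example model, used only to show the shape of a card.
card = ModelCard(
    name="toy-sentiment-classifier",
    intended_use="Sorting short English product reviews into positive or negative.",
    training_data="A made-up set of English-language product reviews.",
    known_limitations=[
        "Not evaluated on languages other than English.",
        "May misread sarcasm.",
    ],
)
print(card.render())
```

Even in this toy form, the card puts limitations on the same footing as the model's name and purpose, which is the point of the label: a reader who never sees the code can still tell where the model should not be trusted.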

She's also spoken extensively about bias — how it starts with data and gets embedded in models. Mitchell doesn't present bias as an accidental glitch but as something that must be actively identified and addressed. Her work challenges the assumption that technical decisions are neutral.

While some in the field still view ethics as a soft topic, Mitchell treats it as core infrastructure. Her perspective insists that technical design must account for human experience. She's shown that doing so doesn't weaken machine learning; it makes it more relevant and trustworthy.

Mitchell is known not just for her research but for her ability to communicate. She speaks in a clear, unpretentious way that makes complex topics easier to engage with. She doesn’t hide behind jargon. This has made her a go-to figure for anyone trying to understand the social dimensions of AI.

She regularly collaborates with people outside the tech world, from social scientists to journalists. Her projects often involve mixed teams with diverse viewpoints, and she encourages this kind of collaboration across the field. The goal is to bring in not just different skill sets but different lived experiences. Mitchell has argued that without this kind of diversity, AI can't be truly fair.

Her influence can be seen in how younger researchers approach the field. Many now consider fairness and accountability part of the technical conversation, not an afterthought. Mitchell’s public presence, through talks, writing, and online discussions, has played a big role in that shift.

She’s also helped make room for dissent. In a fast-paced industry that often discourages criticism, she’s shown that asking hard questions is not a weakness. It’s necessary for building systems that reflect real human needs.

Mitchell continues to work on ways to evaluate machine learning systems more thoroughly. She’s interested in tools that look at long-term outcomes and social impact, not just test accuracy. Her focus is on making sure AI behaves responsibly at scale — before harm happens, not after.

She's also involved in mentoring and research that supports responsible innovation. Whether working with startups, nonprofits, or universities, Mitchell stays committed to helping others carry forward her approach. She remains vocal about the need for clear guardrails in AI development, particularly in large-scale deployments such as language models and vision systems.

Her approach is simple but firm: if AI is going to be part of decision-making systems that affect people’s lives, it needs to be held to a higher standard. That means better oversight, better documentation, and more openness.

Margaret Mitchell's work stands out not just for its technical quality but for its moral clarity. She reminds us that AI doesn't exist in a vacuum. It reflects the values — or the blind spots — of the people who build it.

Margaret Mitchell has spent her career making sure AI is not just smarter but more honest and fair. In a space filled with complex systems and ambitious goals, she brings the focus back to people — those who use the technology and those affected by it. Her work challenges assumptions, asks inconvenient questions, and gives the field better tools for making AI safer and more transparent. Among today’s machine learning experts, she offers a perspective rooted in responsibility, not just performance. As AI becomes a bigger part of daily life, Mitchell’s voice continues to shape how we build with care, not just speed.

Advertisement

How Q-learning works in real environments, from action selection to convergence. Understand the key elements that shape Q-learning and its role in reinforcement learning tasks

Should credit risk models focus on pure accuracy or human clarity? Explore why Explainable AI is vital in financial decisions, balancing trust, regulation, and performance in credit modeling

How Margaret Mitchell, one of the most respected machine learning experts, is transforming the field with her commitment to ethical AI and human-centered innovation

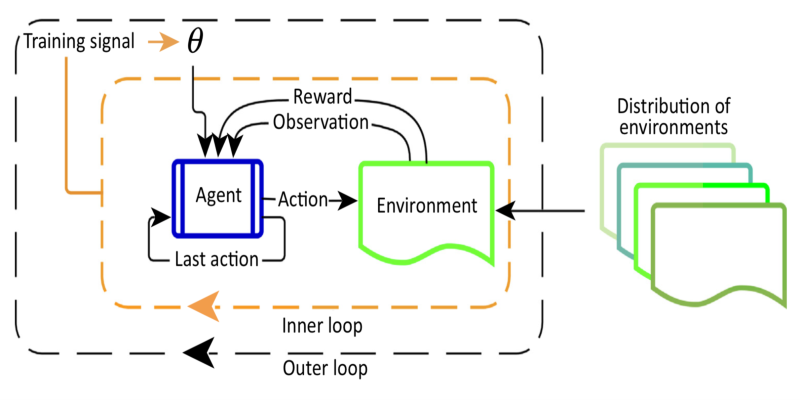

Curious about Meta-RL? Learn how meta-reinforcement learning helps data science systems adapt faster, use fewer samples, and evolve smarter—without retraining from scratch every time

How do we keep digital research accessible and citable over time? Learn how assigning DOIs to datasets and models supports transparency, reproducibility, and proper credit in modern research

Learn how to build scalable systems using Apache Airflow—from setting up environments and writing DAGs to adding alerts, monitoring pipelines, and avoiding reliability pitfalls

How a course launch community event can boost engagement, create meaningful interaction, and shape a stronger learning experience before the course even starts

Learn the full process of deploying ViT on Vertex AI for scalable and efficient image classification. Discover how to prepare, containerize, and serve Vision Transformer models in production

Confused about the difference between a data lake and a data warehouse? Discover how they compare, where each shines, and how to choose the right one for your team

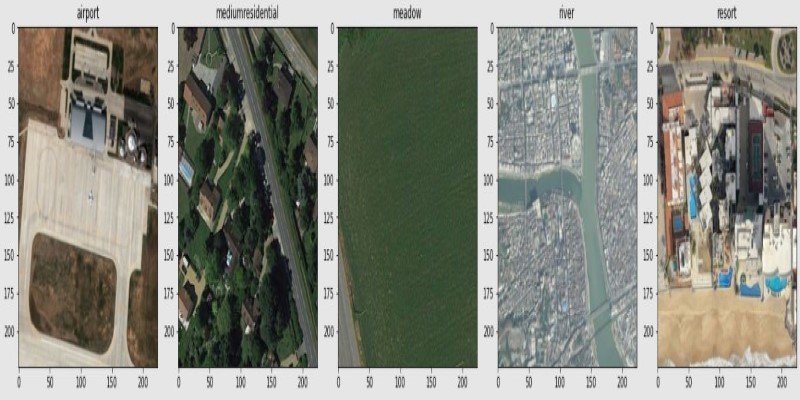

How fine-tuning CLIP with satellite data improves its performance in interpreting remote sensing images and captions for tasks like land use mapping and disaster monitoring

Thinking of moving to the cloud? Discover seven clear reasons why businesses are choosing Google Cloud Platform—from seamless scaling and strong security to smarter collaboration and cost control

Confused about MLOps? Learn how MLflow makes machine learning deployment, versioning, and collaboration easier with real-world workflows for tracking, packaging, and serving models