
The Annotated Diffusion Model isn't just another AI method; it's a new way to understand how machine learning creates images from scratch. Instead of treating the process as a mystery, this model adds explanations at every step, showing how an image evolves across the denoising steps that produce it. It gives researchers and developers a clearer view of what the model is doing and why. That visibility makes systems easier to debug and tune, and their behavior easier to anticipate. In a field where transparency is often lacking, this approach makes AI decisions easier to trust and improve.

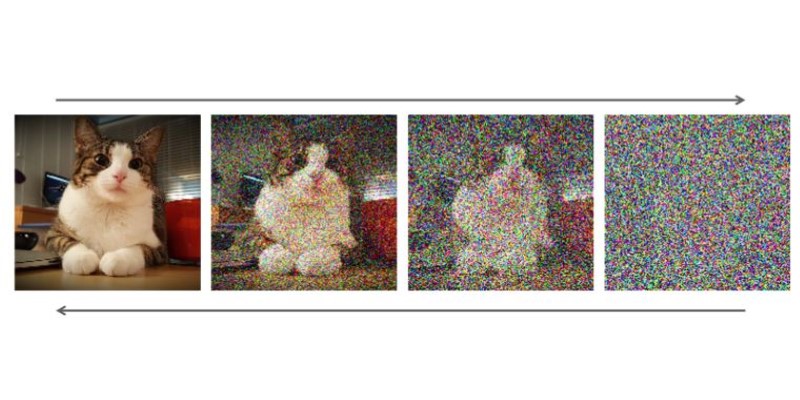

The Annotated Diffusion Model builds on the idea of diffusion processes, where an image is turned into noise and then rebuilt. Standard diffusion models are trained to reverse this noise process step by step, learning how to create a clean image from randomness. The annotated version adds something extra: a series of interpretive layers that show what’s happening at each point in the transformation.
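The article does not spell out the math, but the noising and rebuilding it describes match the standard DDPM (denoising diffusion probabilistic model) formulation. The block below assumes that formulation: a variance schedule $\beta_1, \dots, \beta_T$, with $\alpha_t = 1 - \beta_t$ and $\bar\alpha_t = \prod_{s=1}^{t} \alpha_s$, and a network $\epsilon_\theta$ that predicts the noise that was added.

```latex
\begin{aligned}
q(x_t \mid x_{t-1}) &= \mathcal{N}\!\left(x_t;\ \sqrt{1-\beta_t}\,x_{t-1},\ \beta_t \mathbf{I}\right) \\
q(x_t \mid x_0) &= \mathcal{N}\!\left(x_t;\ \sqrt{\bar\alpha_t}\,x_0,\ (1-\bar\alpha_t)\mathbf{I}\right) \\
L(\theta) &= \mathbb{E}_{x_0,\,t,\,\epsilon}\left[\left\lVert \epsilon - \epsilon_\theta\!\left(\sqrt{\bar\alpha_t}\,x_0 + \sqrt{1-\bar\alpha_t}\,\epsilon,\ t\right)\right\rVert^2\right],
  \quad \epsilon \sim \mathcal{N}(\mathbf{0}, \mathbf{I}) \\
x_{t-1} &= \frac{1}{\sqrt{\alpha_t}}\left(x_t - \frac{\beta_t}{\sqrt{1-\bar\alpha_t}}\,\epsilon_\theta(x_t, t)\right) + \sigma_t z,
  \quad z \sim \mathcal{N}(\mathbf{0}, \mathbf{I})
\end{aligned}
```

The first two lines describe how training images are noised, the third is the training objective, and the last is one reverse (denoising) step, where $z = 0$ on the final step and $\sigma_t^2 = \beta_t$ is one common choice. An annotated model would record quantities such as $x_t$ or the network's internal activations each time that last update is applied.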

Rather than returning only a final image, it exposes intermediate outputs along the way, such as snapshots of the partially denoised image, attention weights, and feature maps, making the process more transparent. You can see how the model forms structure out of noise, where it places focus, and which features it prioritizes. These annotations act as a kind of commentary track, helping users understand how the system reached its output.

By making each stage inspectable, this approach supports debugging, teaching, and analysis. It’s a practical shift for anyone building or fine-tuning these systems, and it offers a closer look at how machine learning turns randomness into meaningful structure.

Diffusion models operate over a series of timesteps. During training, noise is added to images according to a fixed schedule, and the network learns to predict that noise. During generation, the model starts from pure noise and removes it in stages, using what it learned in training. The Annotated Diffusion Model keeps this framework but adds layers that capture what the model is doing at each step.
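A minimal sketch of the generation loop with a per-step record, written in plain Python on a tiny 1-D "image" so it runs without dependencies. Everything here is illustrative: `sample_with_annotations`, the linear schedule values, and especially the noise predictor, which is a hypothetical oracle that already knows the target; a trained network would take its place in practice. Each step stores a copy of the partially denoised signal plus one summary number, the simplest form of the "snapshot" annotation described above.

```python
import math
import random


def linear_beta_schedule(num_steps, beta_start=1e-4, beta_end=0.02):
    """Linearly spaced noise variances beta_1..beta_T (a common DDPM default)."""
    if num_steps == 1:
        return [beta_start]
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]


def sample_with_annotations(x0_target, num_steps=1000, seed=0):
    """Reverse-diffusion loop that records a snapshot after every step.

    The noise predictor is a hypothetical oracle that knows x0_target; a real
    model would call a trained network eps_theta(x_t, t) instead.
    """
    rng = random.Random(seed)
    betas = linear_beta_schedule(num_steps)
    alphas = [1.0 - b for b in betas]
    alpha_bars, running = [], 1.0
    for a in alphas:
        running *= a  # cumulative product: alpha_bar_t
        alpha_bars.append(running)

    x = [rng.gauss(0.0, 1.0) for _ in x0_target]  # start from pure noise
    annotations = []
    for t in reversed(range(num_steps)):
        a, ab, b = alphas[t], alpha_bars[t], betas[t]
        # Oracle stand-in for eps_theta(x_t, t).
        eps = [(xi - math.sqrt(ab) * ti) / math.sqrt(1.0 - ab)
               for xi, ti in zip(x, x0_target)]
        # DDPM mean: (x_t - beta_t / sqrt(1 - alpha_bar_t) * eps) / sqrt(alpha_t)
        mean = [(xi - b / math.sqrt(1.0 - ab) * ei) / math.sqrt(a)
                for xi, ei in zip(x, eps)]
        # Add fresh noise (sigma_t^2 = beta_t) on every step except the last.
        x = [m + math.sqrt(b) * rng.gauss(0.0, 1.0) for m in mean] if t > 0 else mean
        # The annotation: a copy of the current state plus a distance to the target.
        rmse = math.sqrt(sum((xi - ti) ** 2 for xi, ti in zip(x, x0_target)) / len(x))
        annotations.append({"t": t, "snapshot": list(x), "rmse_to_target": rmse})
    return x, annotations


if __name__ == "__main__":
    final, notes = sample_with_annotations([0.5, -0.2, 0.9])
    for note in notes[::200] + [notes[-1]]:
        print(f"t={note['t']:4d}  rmse={note['rmse_to_target']:.4f}")
```

Printing the recorded distance at a few timesteps shows the signal converging on the target as noise is removed; with a real model, the same loop would store whatever per-step quantities are worth inspecting.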

Suppose the model is generating an image of a tree. Early on, there’s little more than noise. By mid-stage, the trunk might begin to form. Later, branches and leaves emerge. With annotations, each phase includes details like which parts of the image the model is attending to, what features are solidifying, and how decisions shift over time.

This is made possible through attention maps and internal feature visualizations. Attention maps show where the model is focusing, which helps identify the parts of the noisy input it treats as signal. Feature maps highlight the shapes and textures being developed at each layer. Gradient-based attribution measures how strongly each input value influences the result.
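To make two of these tools concrete, here is a dependency-free sketch. `attention_map` computes scaled dot-product attention weights, the quantity usually visualized as an attention map; `input_sensitivity` estimates how much each input value moves a scalar output, using central finite differences as a simple stand-in for the autograd gradients a deep-learning framework would provide. The patch embeddings, query, and scoring function in the example are hypothetical.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention_map(queries, keys):
    """Scaled dot-product attention weights: one row per query, softmax(q . k / sqrt(d))."""
    scale = 1.0 / math.sqrt(len(keys[0]))
    return [softmax([scale * sum(qi * ki for qi, ki in zip(q, k)) for k in keys])
            for q in queries]


def input_sensitivity(f, x, h=1e-5):
    """Central finite-difference estimate of df/dx_i for a scalar-valued f."""
    grads = []
    for i in range(len(x)):
        up, down = list(x), list(x)
        up[i] += h
        down[i] -= h
        grads.append((f(up) - f(down)) / (2.0 * h))
    return grads


def region_score(pixels):
    """Hypothetical scalar output that depends mostly on pixel 0."""
    return 0.8 * pixels[0] + 0.1 * pixels[1] ** 2


if __name__ == "__main__":
    # Hypothetical 2-D embeddings for four image patches and one query.
    patch_keys = [[1.0, 0.0], [0.0, 1.0], [0.7, 0.7], [-1.0, 0.0]]
    weights = attention_map([[2.0, 0.1]], patch_keys)[0]
    focus = max(range(len(weights)), key=weights.__getitem__)
    print("attention weights:", [round(w, 3) for w in weights], "focus patch:", focus)
    print("sensitivity:", [round(g, 3) for g in input_sensitivity(region_score, [1.0, 2.0])])
```

Each attention row sums to one, so it can be drawn directly as a heat map over patches; the sensitivity vector plays the role a saliency map plays for real images.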

The benefit is twofold: a clearer understanding of how images come together and better control over how models behave. With visibility into how shapes and forms are decided, developers can tweak parts of the model more precisely rather than making broad changes and hoping for improvement.

The Annotated Diffusion Model goes beyond generating images—it changes how we interact with AI systems. Traditional models often work like black boxes: you feed in input, get an output, and have no clear path from one to the other. Annotated diffusion changes that by opening up the process.

This added transparency helps researchers catch issues early. If a model repeatedly misinterprets certain patterns, annotations can show at which step the problem starts. You’re not just guessing why something went wrong; you can trace the steps and see where the misunderstanding began.
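One way to trace the steps in practice is to compare the per-step snapshots of a problematic run against a reference run and report the first step where they drift apart. The sketch below assumes both runs were recorded in the same step order as lists of snapshots; the function name, the RMSE distance, and the threshold are illustrative choices, not part of any published tool.

```python
import math
from typing import List, Optional, Sequence, Tuple


def rmse(a: Sequence[float], b: Sequence[float]) -> float:
    """Root-mean-square difference between two equally sized snapshots."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)) / len(a))


def first_divergent_step(
    reference: List[Sequence[float]],
    observed: List[Sequence[float]],
    tol: float,
) -> Optional[Tuple[int, float]]:
    """Return (step index, distance) for the first snapshot pair farther apart than tol.

    Returns None if the runs stay within tol at every recorded step.
    """
    if len(reference) != len(observed):
        raise ValueError("runs must record the same number of steps")
    for step, (ref, obs) in enumerate(zip(reference, observed)):
        distance = rmse(ref, obs)
        if distance > tol:
            return step, distance
    return None


if __name__ == "__main__":
    # Hypothetical 2-value snapshots from four recorded steps of each run.
    good_run = [[0.0, 0.0], [0.2, 0.1], [0.4, 0.3], [0.5, 0.5]]
    bad_run = [[0.0, 0.0], [0.2, 0.1], [0.9, -0.2], [1.2, -0.6]]
    print(first_divergent_step(good_run, bad_run, tol=0.1))
```

Stopping at the first divergence, rather than reporting every differing step, points directly at where the misunderstanding began, since later steps inherit the earlier error.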

It’s also valuable in high-stakes applications. In areas like healthcare, design, or science, knowing why an AI chose one feature over another is often just as important as the final result. With annotation, the decision process becomes visible and reviewable.

Imagine a model helping to reconstruct a damaged medical scan. An annotated model won’t just enhance the image—it will show how it interpreted tissue boundaries and where it focused its attention during reconstruction. That gives doctors more to work with and helps build confidence in the tool.

For learning and education, it’s equally useful. Students and early-career researchers benefit from seeing how a complex model reaches its conclusions. It turns theory into something you can watch in action.

As AI becomes part of more systems, its decisions need to be easier to understand. The Annotated Diffusion Model fits into a broader shift toward models that explain themselves. This isn’t just about making AI nicer to use—it's about building trust and accountability in how it works.

So far, most annotation work is happening in research labs, but there's growing interest in bringing it to user-facing tools. For example, a design app that shows how each suggestion was generated, or a security system that explains its detections step by step, could use annotated diffusion models to great effect.

There are challenges. Adding annotations takes more memory and processing power. The data they produce also needs to be managed and interpreted correctly. But those trade-offs bring something valuable: models that are easier to guide, correct, and understand.
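The memory cost is easy to estimate for the simplest annotation, a full image snapshot at each recorded step. This back-of-the-envelope sketch assumes float32 values and a 1,000-step schedule (the step count commonly used with DDPM); `snapshot_bytes` is a hypothetical helper, and it ignores attention and feature maps, which are usually larger because an attention map grows with the square of the number of tokens.

```python
import math


def snapshot_bytes(num_steps, height, width, channels, bytes_per_value=4, every=1):
    """Bytes needed to keep one image-sized snapshot for every `every`-th step."""
    stored_steps = math.ceil(num_steps / every)
    return stored_steps * height * width * channels * bytes_per_value


if __name__ == "__main__":
    mib = 2 ** 20
    full = snapshot_bytes(1000, 64, 64, 3)
    sparse = snapshot_bytes(1000, 64, 64, 3, every=50)
    print(f"every step: {full:,} bytes ({full / mib:.1f} MiB)")
    print(f"every 50th step: {sparse:,} bytes ({sparse / mib:.2f} MiB)")
```

For a single 64×64 RGB image this works out to 49,152,000 bytes (about 46.9 MiB) when every step is kept, versus 983,040 bytes (under 1 MiB) when only every 50th step is stored, and both figures scale linearly with batch size. Recording a subset of steps is one simple way to trade detail for memory.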

As the technique improves, it may become standard in more areas of machine learning. Whether used to teach, troubleshoot, or verify, annotated models offer a better way to interact with AI systems—especially those that shape what we see, build, or decide.

The Annotated Diffusion Model doesn't just make images—it shows its work. That makes a big difference in a field where most decisions happen behind closed doors. By revealing how AI sees patterns, shapes, and structures as it builds an image, it makes the system more understandable and useful. Whether used in research, product development, or education, this model creates space for better interaction between humans and machines. As AI becomes more integrated into daily tools and serious decisions, approaches like this will help ensure that what machines do—and why they do it—isn't hidden in the dark.

Advertisement

How does HDFS handle terabytes of data without breaking a sweat? Learn how this powerful distributed file system stores, retrieves, and safeguards your data across multiple machines

Is your team using AI tools you don’t know about? Shadow AI is growing inside companies fast—learn how to manage it without stifling innovation or exposing your data

How Hugging Face for Education makes AI accessible through user-friendly machine learning models, helping students and teachers explore natural language processing in AI education

Discover lesser-known Pandas functions that can improve your data manipulation skills in 2025, from query() for cleaner filtering to explode() for flattening lists in columns

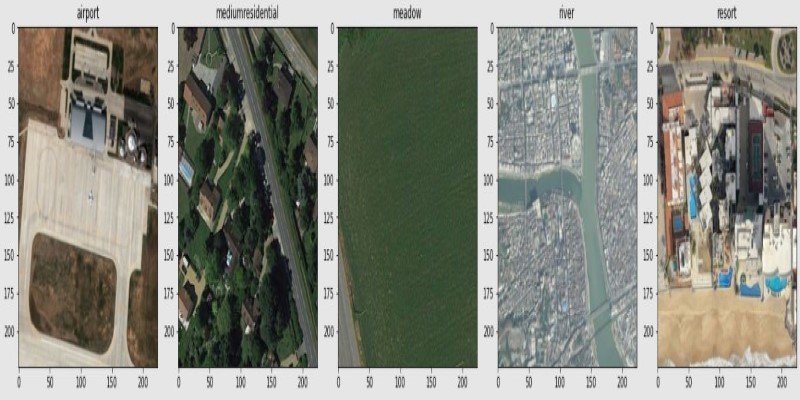

How fine-tuning CLIP with satellite data improves its performance in interpreting remote sensing images and captions for tasks like land use mapping and disaster monitoring

Learn the full process of deploying ViT on Vertex AI for scalable and efficient image classification. Discover how to prepare, containerize, and serve Vision Transformer models in production

Learn how to build scalable systems using Apache Airflow—from setting up environments and writing DAGs to adding alerts, monitoring pipelines, and avoiding reliability pitfalls

How a course launch community event can boost engagement, create meaningful interaction, and shape a stronger learning experience before the course even starts

New to YARN? Learn how YARN manages resources in Hadoop clusters, improves performance, and keeps big data jobs running smoothly—even on a local setup. Ideal for beginners and data engineers

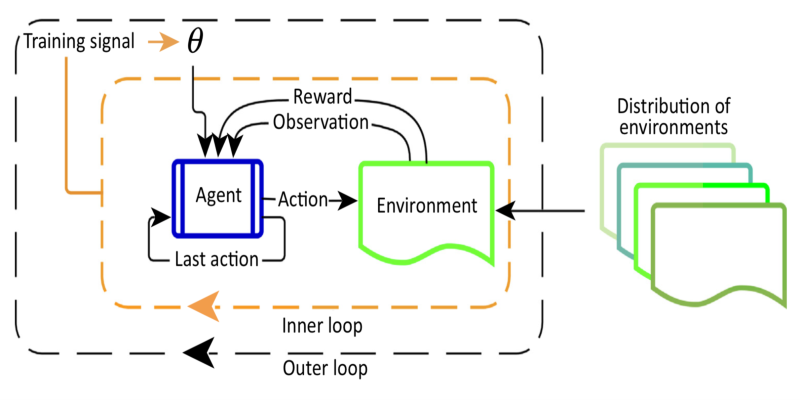

Curious about Meta-RL? Learn how meta-reinforcement learning helps data science systems adapt faster, use fewer samples, and evolve smarter—without retraining from scratch every time

How Margaret Mitchell, one of the most respected machine learning experts, is transforming the field with her commitment to ethical AI and human-centered innovation

Curious why developers are switching from Solidity to Vyper? Learn how Vyper simplifies smart contract development by focusing on safety, predictability, and auditability—plus how to set it up locally